Hmm, I can post mine. It's not pretty though, so please let me know if you need clarification. I wrote this relatively recently, specifically for part-of-speech tagging.

Step-by-step explanation of the decoding algorithm. Bottom-up dynamic programming. Stringlist): """decode(Stringlist) Decode takes a list.

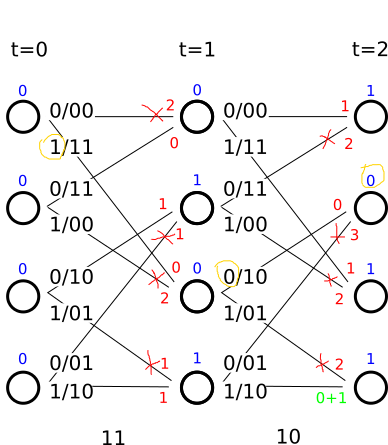

The goal of decoding is to find the most likely hidden state sequence, that is, the most likely path through a model given an observed sequence.
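To make the decoding step concrete, here is a minimal sketch of a Viterbi decoder in plain Python. It is not the code referred to above: the function name `viterbi`, the dictionary-based model layout, and the toy two-tag model (`N`, `V`) with its probabilities are all assumptions made up for illustration. It works in log space to avoid underflow on long sequences.

```python
import math


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (log_prob, path) for the most likely hidden state sequence.

    All names and the dict-of-dicts model layout are illustrative assumptions.
    """
    if not observations:
        return 0.0, []

    def log(p):
        # log(0) is treated as -inf so impossible paths are never chosen
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in state s at time t
    best = [{s: log(start_p[s]) + log(emit_p[s].get(observations[0], 0.0))
             for s in states}]
    # back[t][s]: predecessor of s on that best path
    back = [{}]

    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            # pick the best previous state to transition from
            prev, score = max(
                ((r, best[t - 1][r] + log(trans_p[r][s])) for r in states),
                key=lambda pair: pair[1],
            )
            best[t][s] = score + log(emit_p[s].get(observations[t], 0.0))
            back[t][s] = prev

    # follow the back-pointers from the best final state
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return best[-1][last], path


# Hypothetical toy tagging model: N = noun, V = verb (made-up probabilities)
states = ["N", "V"]
start_p = {"N": 0.8, "V": 0.2}
trans_p = {"N": {"N": 0.3, "V": 0.7}, "V": {"N": 0.6, "V": 0.4}}
emit_p = {"N": {"fish": 0.7, "swim": 0.3}, "V": {"fish": 0.2, "swim": 0.8}}

log_prob, tags = viterbi(["fish", "swim"], states, start_p, trans_p, emit_p)
print(tags, math.exp(log_prob))
```

For the toy model, the decoder tags "fish swim" as `N`, `V`. The table `best` is filled left to right (bottom-up dynamic programming), and only the single best predecessor per state is remembered at each step.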

Assume that characters depend only on their corresponding features and are independent of the others. Compute probabilities that a character c. Basically, the idea is that we are interested in the best k structures at the root nodes. Some Python code implementing the algorithm can be found here.

This might not be very useful since most of us cannot see the future. For many species pre. Python implementation. Problem and libraries. HMMs can be adapted for more general use. As the goal is to get the most likely path through the HMM for a given observation, only the most. Although I used SWIG to connect the GSL to Python for the book, I use SciPy. Viterbi algorithm (optimal path solution). HMM learning using the EM algorithm. Monte-Carlo Methods.

Fundamental theorems. Chart parsing is a parsing algorithm that uses dynamic programming, a technique described in. The more general field. These algorithms are implemented in the nltk.
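To show how dynamic programming applies to chart parsing, here is a small sketch of a CYK-style recognizer. It does not use nltk and is not the implementation mentioned above. The function name `cyk_recognize`, the grammar encoding (a dict of lexical rules and a dict of binary rules, assuming the grammar is in Chomsky normal form), and the toy grammar are all assumptions for illustration.

```python
def cyk_recognize(words, lexical, binary, start="S"):
    """Return True if `words` can be derived from `start`.

    Assumes a grammar in Chomsky normal form (hypothetical encoding):
      lexical: word -> set of nonterminals (rules A -> word)
      binary:  (B, C) -> set of nonterminals (rules A -> B C)
    """
    n = len(words)
    if n == 0:
        return False

    # chart[i][j] holds every nonterminal that spans words[i:j]
    chart = [[set() for _ in range(n + 1)] for _ in range(n + 1)]

    # spans of width 1 come straight from the lexical rules
    for i, word in enumerate(words):
        chart[i][i + 1] = set(lexical.get(word, ()))

    # wider spans are built bottom-up from two adjacent narrower spans
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):  # split point
                for left in chart[i][k]:
                    for right in chart[k][j]:
                        chart[i][j] |= binary.get((left, right), set())

    return start in chart[0][n]


# Hypothetical toy grammar: S -> NP VP, VP -> V NP
lexical = {"she": {"NP"}, "eats": {"V"}, "fish": {"NP"}}
binary = {("NP", "VP"): {"S"}, ("V", "NP"): {"VP"}}

print(cyk_recognize(["she", "eats", "fish"], lexical, binary))
print(cyk_recognize(["eats", "she", "fish"], lexical, binary))
```

The chart plays the same role as the table in Viterbi decoding: each cell is computed once from smaller cells and then reused, which is what keeps the parse from re-deriving the same sub-spans.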
